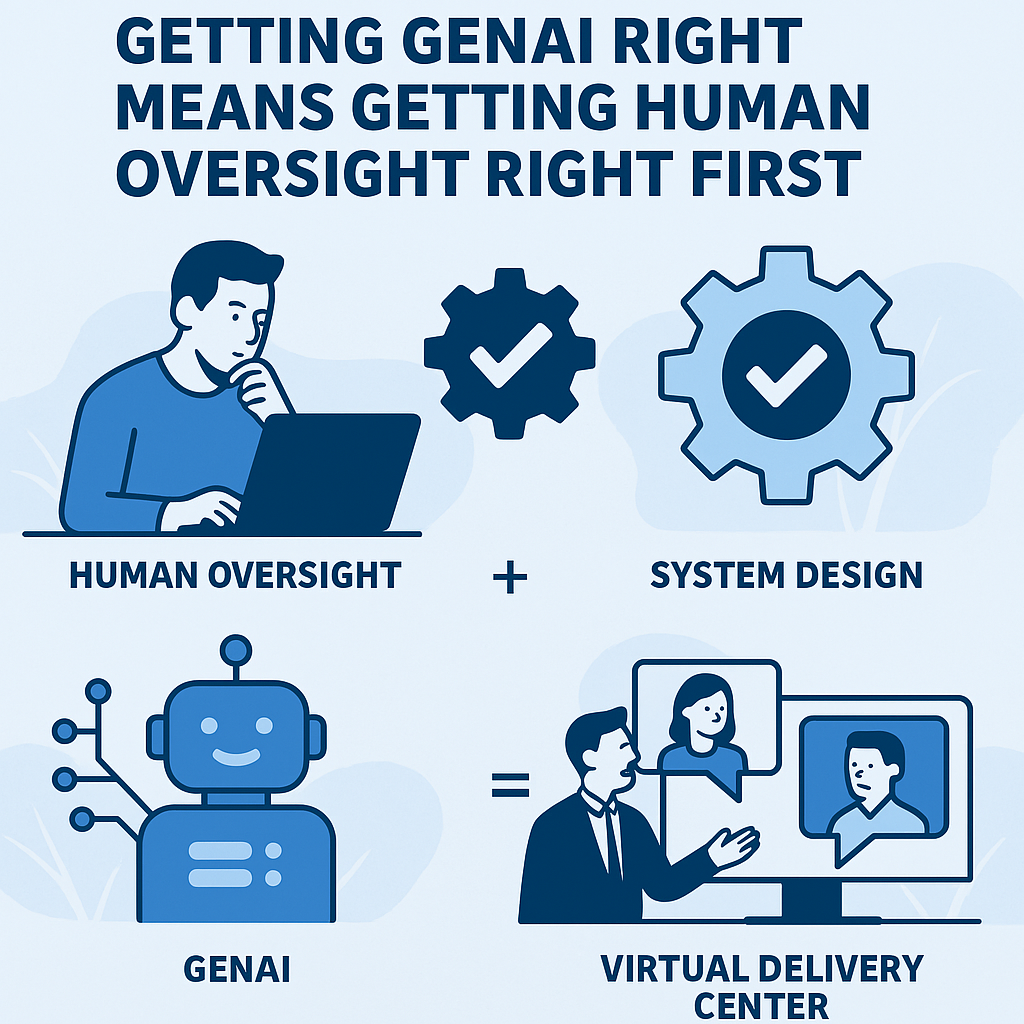

Getting Human Oversight Right: The Missing Key to Enterprise GenAI Success

In the race to adopt generative AI, most organizations fall into a familiar trap: they assume putting a human at the end of the pipeline will automatically catch every problem the machine misses. But “human in the loop” is not a silver bullet—it’s a broken promise when applied without design, training, or structure.

Oversight can fail not because people are absent, but because the system makes it easy to trust, hard to question, and nearly impossible to escalate. Think about how blindly we follow GPS instructions, even when they clearly lead us into dead ends or detours. That same complacency happens with GenAI—only the stakes are higher.

Oversight must be intentional. It must be part of the system’s DNA. And like the AI itself, it must be tested, evaluated, iterated, and evolved. Otherwise, organizations risk outsourcing judgment to machines and assuming a human will raise their hand—only to realize later that no one ever did.

Oversight systems collapse not in loud failures, but in quiet frictions:

Automation Bias makes people over-trust machines.

Missing Context forces humans to guess whether outputs are right.

No Counterevidence means reviewers see only one side of the story.

Escalation Hurdles—paperwork, ambiguity, or fear—stop humans from speaking up.

Vague Guidance results in reviews based on “vibes” rather than criteria.

Efficiency Pressure disincentivizes scrutiny in favor of speed.

These are not edge cases. They are everyday realities in systems deployed too fast, without enough scaffolding. The irony is brutal: AI is implemented to reduce errors, but without proper oversight it can increase them.

Organizations must treat oversight not as a checkbox but as a living architecture that prevents silent failures from becoming public disasters.

Human oversight only works if it’s engineered into the design of the GenAI solution—not duct-taped on during deployment. This begins at the use case definition stage.

Clarity on Use: What the system is for is just as important as what it's not for.

Embedded Reviews: Oversight checkpoints should be natively built into workflows.

Oversight Personas: Not everyone is qualified to review AI outputs. Assign roles with clear accountability.

Confidence Signaling: Systems should indicate how sure they are, and when they’re unsure, request human backup.

Scenario Testing: “What-if” cases must be simulated, including known failure points and escalation paths.

Treat oversight like software engineering. Don’t just build the AI—build the support beams that keep it honest, reliable, and humble.
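The use-case clarity and confidence-signaling points above can be sketched as a small routing function. This is a minimal illustration rather than a prescribed implementation: the `Output` type, the `route` function, the `in_scope` flag, and the 0.80 threshold are all hypothetical, and the sketch assumes the system exposes a confidence score between 0 and 1.

```python
from dataclasses import dataclass

# Hypothetical threshold; a real value would be calibrated per use case.
REVIEW_THRESHOLD = 0.80


@dataclass
class Output:
    text: str
    confidence: float  # assumed: a score in [0, 1] supplied by the system
    in_scope: bool     # assumed: whether the request fits the defined use case


def route(output: Output) -> str:
    """Return 'reject', 'review', or 'auto' for a generated output."""
    if not output.in_scope:
        # The system was asked to do something it is not meant for.
        return "reject"
    if output.confidence < REVIEW_THRESHOLD:
        # The system is unsure, so it requests human backup.
        return "review"
    return "auto"
```

A threshold like this is only a starting point. It would normally be tuned against reviewed samples, for example as part of the scenario tests described above.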

Once the system is live, how do you know oversight is working?

Rejection Rates: Are users flagging errors? If no one is, either the system is perfect (unlikely) or oversight is asleep at the wheel.

False Confidence Signals: Are low-confidence outputs slipping through without review?

Escalation Triggers: When an issue is flagged, does it actually trigger an escalation, and how long does it take to reach resolution?

Auditability: Can the organization trace back what happened, who reviewed it, and why a call was made?

These metrics should live inside your GenAI dashboard, not a separate spreadsheet. Oversight isn’t a legal defense—it’s a real-time quality control system. And like any system, it’s only as good as the telemetry it emits.
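As a rough sketch of that telemetry, the first three metrics above can be computed from review records that double as an audit trail. The `ReviewRecord` fields, the `oversight_metrics` function, and the 0.8 low-confidence cutoff are assumptions made for illustration, not a standard schema.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class ReviewRecord:
    output_id: str
    confidence: float                      # assumed score in [0, 1]
    reviewer: Optional[str] = None         # None means no human reviewed it
    decision: Optional[str] = None         # "approved", "rejected", or None
    flagged_at: Optional[datetime] = None
    resolved_at: Optional[datetime] = None


def oversight_metrics(records, low_conf=0.8):
    """Summarize rejection rate, unreviewed low-confidence outputs,
    and median hours from flag to resolution."""
    reviewed = [r for r in records if r.reviewer is not None]
    rejected = [r for r in reviewed if r.decision == "rejected"]
    unreviewed_low = [
        r for r in records if r.confidence < low_conf and r.reviewer is None
    ]
    hours = [
        (r.resolved_at - r.flagged_at).total_seconds() / 3600
        for r in records
        if r.flagged_at is not None and r.resolved_at is not None
    ]
    return {
        "rejection_rate": len(rejected) / len(reviewed) if reviewed else 0.0,
        "unreviewed_low_confidence": len(unreviewed_low),
        "median_hours_to_resolution": median(hours) if hours else None,
    }
```

A rejection rate that sits at zero for weeks, or a growing count of unreviewed low-confidence outputs, is the kind of signal a dashboard built on these records could surface.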

Even with perfectly designed workflows, oversight will fail if culture lags behind. You need a workforce that is trained to doubt—constructively.

Teach employees how GenAI works and where it fails.

Provide examples of subtle errors and “plausible lies” generated by AI.

Use sandbox environments where people can test their review instincts.

Create reward systems not just for usage, but for intervention.

Above all, normalize skepticism. A healthy tension between machine output and human judgment creates better outcomes. In the era of synthetic content and machine hallucinations, trust-but-verify isn’t a strategy—it’s survival.

The irony of AI oversight? GenAI can help solve the very problems it creates.

Use AI to flag low-confidence responses for human review.

Build GenAI “second opinions” that critique other GenAI outputs.

Deploy AI agents to summarize the pros and cons of each generated recommendation.

Let GenAI track human review behavior—flagging when reviewers grow complacent.

This human+AI loop is not about automating ethics. It’s about augmenting vigilance. With the right orchestration, machines can nudge humans into better oversight—not replace them.
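To make the last idea concrete, here is a minimal sketch of complacency tracking. It uses a plain rule-based heuristic as a stand-in for a GenAI monitor: a reviewer is flagged when they approve nearly everything and spend very little time per review. The function name, the input tuple format, and all three thresholds are hypothetical and would need tuning against real review data.

```python
from collections import defaultdict
from statistics import median


def complacent_reviewers(reviews, min_reviews=20, approval_ceiling=0.98,
                         min_seconds=10.0):
    """Flag reviewers who approve almost everything, very quickly.

    reviews: iterable of (reviewer, approved, seconds_spent) tuples.
    Returns a sorted list of reviewer names worth a closer look.
    """
    by_reviewer = defaultdict(list)
    for reviewer, approved, seconds in reviews:
        by_reviewer[reviewer].append((approved, seconds))

    flagged = []
    for reviewer, items in by_reviewer.items():
        if len(items) < min_reviews:
            continue  # too little data to judge
        approval_rate = sum(1 for approved, _ in items if approved) / len(items)
        typical_seconds = median(seconds for _, seconds in items)
        if approval_rate >= approval_ceiling and typical_seconds < min_seconds:
            flagged.append(reviewer)
    return sorted(flagged)
```

A flag here is a prompt for a conversation or a spot check, not a verdict: some queues genuinely contain mostly correct outputs.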

At AiDOOS, we’ve embedded oversight into the heart of our Virtual Delivery Center (VDC) model. Here’s how:

Pre-Vetted Human Layers: Every VDC has domain experts who act as the human-in-the-loop across AI-integrated projects.

Modular Oversight Tracks: Oversight isn’t one-size-fits-all. We match risk-based levels of review with project sensitivity.

AI Governance Templates: Every VDC engagement includes GenAI-specific controls, escalation paths, and audit logs.

Continuous Risk Auditing: Oversight behavior is tracked and reported in every sprint or delivery cycle—not just at the end.

Oversight isn’t a service line. It’s the operating principle of responsible delivery. That’s why our VDCs are built to provide both velocity and vigilance—accelerating AI use while making trust a first-class citizen.

We’re entering a new era—not just of AI, but of accountability.

Every organization will eventually have to answer for the decisions its AI made. The good ones will show their oversight systems—the logs, the reviews, the triggers, the culture. The bad ones will point to a person at a desk and say, “We had a human there.”

That won’t be enough.

True oversight is designed, measured, practiced, and proven. It’s not a fallback—it’s a feature. And in a world where GenAI moves fast and breaks things, the ability to slow down and say, “Wait, let’s check that” may be your biggest competitive advantage.
